In a few seconds what can you tell about people… or websites?


Some famous research has shown that student evaluations given after only a few seconds of video [pdf] are indistinguishable from evaluations from students who actually had the professor for an entire semester!

There has been some relevant research on the importance of immediate website actions and impressions:

Visual Appeal: Impressions of a homepage’s visual appeal and aesthetics happen within milliseconds [pdf].

5-second tests: Give users five seconds to look at an image or page-design and you get instant feedback on salient elements or problems in a design. If users can’t find their way or orient to your design immediately, then this can be an early indication the design needs improving.

First-Click Test: The first click users make on a webpage is an excellent predictor of whether they will successfully complete the task.

But what about task-level usability? While our visceral reactions to static images or teaching styles might be reliable, would they hold up in a typical usability test?

Traditional Usability Tests Last Hours not Seconds

A lot of time and effort goes into planning a usability test. In a typical lab-based test, users spend several minutes (sometimes hours) on a website attempting tasks. In a remote unmoderated test, users have more distractions and typically less motivation to focus on a website for long periods of time. However, they still typically spend 10 to 30 minutes working through tasks.

Attempting tasks familiarizes users with the website architecture and usability. The process primes users to then answer post-test usability questionnaires such as the System Usability Scale (SUS) which provides an overall numeric picture of the usability of a website.

If we consider the SUS a reliable measure of website usability, is five seconds enough to answer questions such as “I found the website unnecessarily complex” or “I thought the website was easy to use”?
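For readers unfamiliar with how the ten SUS responses become a single number, here is a minimal sketch of the standard scoring procedure. The function name `sus_score` is my own for illustration; it assumes the ten items are in their standard order and answered on a 1 (strongly disagree) to 5 (strongly agree) scale. Odd-numbered items such as “easy to use” are positively worded, and even-numbered items such as “unnecessarily complex” are negatively worded, so they are scored in opposite directions.

```python
def sus_score(responses):
    """Return the 0-100 SUS score for one respondent.

    responses: ten integers from 1 (strongly disagree) to 5 (strongly agree),
    given in the standard SUS item order.
    """
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 item responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for item_number, r in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd items are positively worded: contribution is response - 1.
            total += r - 1
        else:
            # Even items are negatively worded: contribution is 5 - response.
            total += 5 - r

    # The ten contributions (0-4 each) sum to 0-40; scale to 0-100.
    return total * 2.5
```

A respondent who answers 3 (neutral) on every item scores 50, and one who fully agrees with every positive item and fully disagrees with every negative item scores 100.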

7 Websites and 256 Users Later…

To find out I set up unmoderated usability tests across seven websites.

- 4 airline websites: AA.com, UAL.com, Southwest.com, JetBlue.com

- 3 retail websites: CrateandBarrel.com, ContainerStore.com, Pier1.com

Users were recruited on the internet and asked to complete two core tasks, then answer the SUS at the end of the test. The same tasks were used across all four airline websites (finding the price of round-trip tickets), and the same tasks were used across the three retail websites (locating products and finding store hours).

I created three testing conditions to understand the effects of limited testing time on SUS scores. Users were randomly assigned to one of three conditions:

- 5 seconds

- 60 seconds

- No time limit

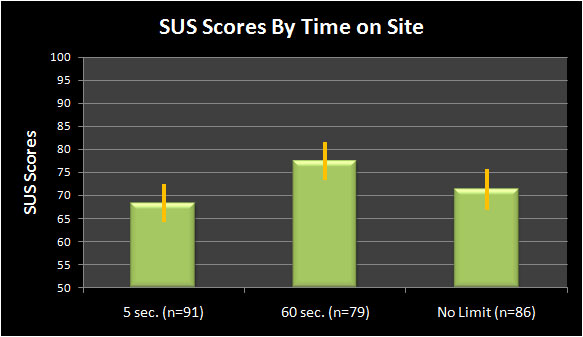
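Random assignment of this kind can be sketched in a few lines. This is only an illustration, not the mechanism of the testing tool actually used: the names `CONDITIONS` and `assign_condition` and the seed are assumptions of mine. Each arriving user independently draws one of the three conditions, which gives roughly, but not exactly, equal group sizes.

```python
import random
from collections import Counter

# The three time-limit conditions described above.
CONDITIONS = ["5 seconds", "60 seconds", "no time limit"]


def assign_condition(rng):
    """Pick one condition uniformly at random for a single user."""
    return rng.choice(CONDITIONS)


# A fixed seed (arbitrary, for reproducibility of the illustration only).
rng = random.Random(42)

# Simulate assigning 256 users, one at a time.
assignments = [assign_condition(rng) for _ in range(256)]
counts = Counter(assignments)

for condition in CONDITIONS:
    print(condition, counts[condition])
```

Because each draw is independent, the groups come out only approximately balanced. A design that needed exactly equal groups would instead shuffle a pre-built list of condition labels.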

In total I tested 256 users approximately balanced between the 7 websites and 3 conditions. There were between 79 and 91 users in each test condition and between 36 and 42 users on each website.

Results

Interestingly enough, the perception of website usability from the 5-second condition was statistically indistinguishable from the no-time-limit condition. The observed difference in average SUS scores was less than 3 points (4%).

Despite frequent comments from users like “I wish I had more time on the site” their SUS scores were similar to users who spent a lot more time on the site.
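To make the idea of a “statistically indistinguishable” difference concrete, here is a minimal sketch of one common way to compare mean SUS scores between two independent groups: Welch's t-test. This is an assumption on my part for illustration, since the specific test is not stated above. The function name `welch_t` is hypothetical, and the two score lists are made-up placeholders, not data from this study.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Return (t, df) for Welch's unequal-variance t-test on two samples."""
    # Squared standard error of each group's mean (sample variance / n).
    va = variance(a) / len(a)
    vb = variance(b) / len(b)

    # t statistic: difference in means over the combined standard error.
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)

    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Placeholder SUS scores, invented purely to show the call.
five_second = [62.5, 70.0, 55.0, 80.0, 67.5, 72.5]
no_limit = [65.0, 75.0, 60.0, 77.5, 70.0, 67.5]

t, df = welch_t(five_second, no_limit)
pct_diff = (mean(no_limit) - mean(five_second)) / mean(five_second) * 100
print(f"t = {t:.2f}, df = {df:.1f}, difference = {pct_diff:.1f}%")
```

The t value and degrees of freedom would then be looked up against the t distribution (for example with a statistics package) to get a p-value. A small t relative to its degrees of freedom is what "not statistically significant" means here.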

The 60 Second Phenomenon

Somewhat surprisingly, the SUS scores from the 60-second group were between 8% and 12% higher than those from the 5-second and unlimited-time groups (p < .01).

I’m somewhat puzzled by this result and am unsure why users who are interrupted after one minute tend to rate websites as more usable than users who have only five seconds or an unlimited amount of time. One hypothesis is that these interrupted users have an inflated sense of accomplishment, assume they would have completed the task, and thus rate the website higher on the SUS.

Prior website exposure doesn’t affect the patterns

Perhaps users already have preconceived notions of usability from prior visits to the websites. I used large public-facing websites, so it would make sense that a good proportion of users had some exposure. Fortunately, I asked users how many times they’d visited each website. Across the websites, 70% of users reported having never visited the site before.

When I compare the SUS scores for just these first-time users, the same pattern holds. The difference between the five-second users and the no-time-limit users was larger (8%) but still not statistically significant (p > .11).

The 60-second group again rated the websites highest: 10% and 19% higher than the no-limit and 5-second groups, respectively. Again, this confirms the bizarre result.

Limitations

There could be a few reasons for these results. For example, the tasks I’ve used could be too homogeneous in their difficulty. Perhaps harder tasks would affect the ratings more. Also, a good portion of users might have been able to complete the tasks in under 60 seconds, making the website seem easier to them than it did to users who had to work through both tasks. More research is needed to understand the effects of task difficulty, the number of tasks, and task length on SUS scores.

The results of this analysis do, however, suggest that under typical conditions, perceptions of usability are formed within seconds of viewing a website.

Giving users only five seconds to complete tasks generates SUS scores that are very similar to SUS scores from users taking 5 to 15 minutes to complete tasks. Usability, as measured by standardized questionnaires, also appears to be strongly affected by initial impressions.