Usability testing is artificial.

We do our best to simulate a scenario that is as close as possible to what users would actually do with the software while we observe or record them.

However, no amount of realism in the tasks, data, software or environment can change the fact that the whole thing is contrived.

This doesn’t mean it’s not worth doing.

You still get a ton of valuable data and uncover problems that, if fixed, will likely lead to a better user experience.

However, it’s important to understand the subtle biases that creep into moderated and unmoderated usability tests:

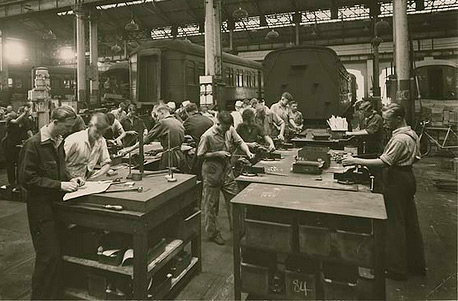

- Hawthorne Effect: “You and all those people online and in the next room are watching my every keystroke, so I’m going to be more vigilant and determined than I ever would be to complete those tasks; I’ll even read the help text.” When users know they’re being observed, they tend to behave slightly differently than normal. The so-called Hawthorne Effect takes its name from experiments on worker productivity conducted from 1927 to 1932 at the Hawthorne Works factory. Experimenters found that productivity rose not because of changes in environmental conditions (as predicted), but simply because the workers were being observed (at least, that is how the results were later interpreted). It’s often called the observer effect, and several of the biases below fall under this umbrella.

- Task-Selection Bias, a.k.a. “If you’ve asked me to do it, it must be able to be done”: We create the tasks users complete. I’ve never created a task that was impossible to complete; doing so is potentially unethical and probably doesn’t even make sense. But users know this, too. If you ask users to find something or accomplish something, they assume it is possible. Outside a usability test, though, users have their own goals and don’t know whether a product is sold on a website or whether a function exists in the software. I attribute this bias to Rich Cordes from IBM, and Jim Lewis describes the same bias in Current Issues in Usability Evaluation (pp. 345-346).

- Social Desirability: Users generally tell you what they think you want to hear and are less likely to say disparaging things about people (seen and unseen) and products. This often means users blame themselves, not the product, when they have a problem, but it can also affect their stated preferences. In a recent usability study, we had users try to complete tasks on a software product. We asked them to imagine they actually had the software installed on their computer and asked how likely they would be to purchase it after the trial. Over 90% of our users said they would, yet the company’s data shows that no more than 10% actually convert from trial to purchase. Our solution? We have data from three competitors, so we can make relative comparisons of purchase rates and pay less attention to the absolute rate.

- Availability: Only people who can spare two hours during the day can volunteer for a study, which limits the users you test to those who are available. This is a problem when your actual users are hard to recruit (engineers, physicians, or other skilled workers) because they can’t easily take time off work. Our best workaround for this inevitable problem is to use remote sessions when possible to eliminate travel time. We also have an after-hours moderator so we can test on nights and weekends, which we’ve found increases our chances of reaching those harder-to-test users.

- Honorariums: Paying people for their time makes sense, but if the honorarium is the user’s sole motivation, the quality of the data can be questionable. This is especially a concern for unmoderated studies, where we’ve found that between 2% and 20% of users will cheat to receive the honorarium. There isn’t an easy solution to this issue. For moderated sessions in our lab, we pay more (typically $50 to $150 for studies lasting from 30 minutes to 2 hours). For unmoderated sessions, we pay based on the amount of time (typically $10 to $30). If you pay nothing, you run into the problem of not recruiting enough users. However, the panel provider OP4G uses a model in which participants donate their honorarium to charity, which helps mitigate this pay-for-test bias.

- “If you’ve asked me about it, it must be important”: We often probe users about certain actions, selections, or things they’ve said. Often users don’t have an opinion or are unsure why they did something. When we ask them about it anyway, we may end up summarizing irrelevant information. It’s similar to polling people on an issue they know nothing about: they will still give you an opinion.

- Note Taking: I’ve had users say, “I see you wrote something down after I did that, so I must have done something wrong.” Users who are aware that the moderator is taking notes and observing their behavior may become more self-conscious about their actions and feel scrutinized or judged. Because people aren’t accustomed to being monitored, this discomfort can change the decisions they make and how they behave. In moderated studies, we often find that taking notes with pen and paper is the most discreet method, since it avoids distracting keyboard clicking.

- Tech-Savvy: Most of the testing we do is with a computer or mobile device. If you use these devices only because your son-in-law told you to, or because you have to, you’re probably not volunteering to spend 2 hours trying to do things in a usability study. As a result, participants tend to be more tech-savvy than the broader user population. When the target population is less tech-savvy, we do the best we can to recruit and observe users who are less inclined to respond to requests to participate.

- Recency & Primacy Effects: The Recency Effect is the tendency to weigh recent events more heavily than earlier ones. Conversely, when events that happened first are weighed more heavily, it’s called the Primacy Effect. In usability testing, these effects show up most often in task and product order. Users typically perform worse on their initial tasks as they get accustomed to the testing situation and to being observed. When testing multiple interfaces or products, the most recently used product may be more salient in the user’s mind when they are asked to state a preference. The best way to minimize primacy and recency effects is to alternate (counterbalance) the presentation order of tasks and products.
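Alternating presentation order is usually done by counterbalancing. One common way to do it, which the text above doesn’t prescribe, is a balanced Latin square (a Williams design): every task or product appears in each position equally often, and each one immediately follows every other one equally often. Below is a minimal Python sketch under that assumption; `balanced_latin_square` and `order_for_participant` are hypothetical helper names, not part of any particular tool.

```python
def balanced_latin_square(n: int) -> list[list[int]]:
    """Return presentation orders for n conditions (tasks or products).

    Across the returned rows, each condition appears once in every position,
    and each condition immediately follows every other condition equally
    often. For odd n, mirrored rows are appended (2n orders in total).
    """
    # First row follows the pattern 0, n-1, 1, n-2, 2, ...
    first: list[int] = []
    low, high = 0, n - 1
    for i in range(n):
        if i % 2 == 0:
            first.append(low)
            low += 1
        else:
            first.append(high)
            high -= 1
    # Each subsequent row shifts every entry by one (mod n).
    rows = [[(c + shift) % n for c in first] for shift in range(n)]
    if n % 2 == 1:
        # Odd n needs the reversed rows to balance which item follows which.
        rows += [list(reversed(row)) for row in rows]
    return rows


def order_for_participant(participant: int, items: list[str]) -> list[str]:
    """Cycle participants through the orders so each order is used equally."""
    rows = balanced_latin_square(len(items))
    return [items[i] for i in rows[participant % len(rows)]]


if __name__ == "__main__":
    products = ["Product A", "Product B", "Product C", "Product D"]  # hypothetical
    for p in range(4):
        print(p, order_for_participant(p, products))
```

With four products, participants 0 through 3 each get a different order and participant 4 starts the cycle again, so recruiting in multiples of the number of orders keeps the design balanced.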

Every study has bias, but bias alone isn’t a reason to dismiss the data. In fact, most of the information we have from psychological studies comes from a highly homogeneous group: college students who are likely taking a psychology class and participating for extra credit. Despite this shortcoming, we are still able to learn a lot about how things like new teaching methods affect human performance and attitudes.

In usability tests, as in psychological studies, the focus is on comparison: performance over time, or against another design or product, is what provides the meaning. Reducing biases where possible helps improve the credibility and quality of the data.
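The purchase-intent example under Social Desirability relies on this kind of comparison: stated rates are inflated for every product, so the signal lies in how products compare with one another. Here is a minimal Python sketch of that relative comparison, assuming each product’s stated intent is summarized as a count of “would buy” responses out of n participants. The names `StatedIntent` and `relative_intent` and the sample counts are hypothetical, not taken from the study.

```python
from dataclasses import dataclass


@dataclass
class StatedIntent:
    product: str
    would_buy: int  # participants who said they would purchase
    n: int          # participants who were asked


def relative_intent(results: list[StatedIntent]) -> dict[str, float]:
    """Express each product's stated purchase rate relative to the mean
    stated rate across all products tested.

    The absolute rates are not interpreted; an index above 1.0 means the
    product scored above the group average, below 1.0 means below it.
    """
    rates = {r.product: r.would_buy / r.n for r in results}
    mean_rate = sum(rates.values()) / len(rates)
    return {product: rate / mean_rate for product, rate in rates.items()}


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    data = [
        StatedIntent("Ours", 45, 50),
        StatedIntent("Competitor A", 40, 50),
        StatedIntent("Competitor B", 48, 50),
        StatedIntent("Competitor C", 42, 50),
    ]
    ranked = sorted(relative_intent(data).items(), key=lambda kv: kv[1], reverse=True)
    for product, index in ranked:
        print(f"{product}: {index:.2f}")
```

This treats the inflation from social desirability as roughly similar across products, which is itself an assumption; the index supports statements like “above or below the competitors,” not a prediction of the real conversion rate.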

Having recordings and notes of user sessions allows us and others to review hypotheses and check our conclusions for biases. In fact, in the years since the famous Hawthorne studies were conducted, many researchers have re-examined the original data and come to different conclusions about what improved the observed workers’ performance. While the generalizability of observer effects remains a topic of research, one thing is clear: biases can never be entirely eliminated, so being aware of them and communicating their potential impact on decisions is often the best remedy.