Rating scales are used widely. Ways of interpreting rating scale results also vary widely.

What exactly does a 4.1 on a 5-point scale mean?

In the absence of any benchmark or historical data, researchers and managers look at so-called top-box and top-two-box scores (boxes refer to the response options).

For example, on a five-point scale, the respondents who selected the most favorable response, “strongly-agree,” fall into the top box. Counting them gives the top-box count.

| Strongly-Disagree | Disagree | Undecided | Agree | Strongly-Agree |
|---|---|---|---|---|
| 1 | 2 | 3 | 4 | 5 |
| | | | | Top-Box |

Dividing this top-box count by the total number of responses generates a top-box proportion.

The idea behind this practice is that you’re counting only those respondents who express a strong attitude toward a statement. This applies to standard Likert item options (strongly disagree to strongly agree) as well as to other response options, such as “definitely will not purchase” to “definitely will purchase.”

Top Two-Box Scores

Top-two-box scores include responses to the two most favorable response options. On five-point Likert-type scales this would include both agree responses (“agree” and “strongly agree”).
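Both scores come down to the same counting step, which can be sketched in a few lines of Python. The function name `top_box_proportion`, its parameters and the sample ratings are made up for illustration, and the sketch assumes responses are coded as integers where higher means more favorable.

```python
def top_box_proportion(responses, scale_max, boxes=1):
    """Share of responses that fall in the `boxes` most favorable options.

    Assumes integer-coded responses where `scale_max` is the most
    favorable option (for example 5 for "strongly agree").
    """
    if not responses:
        raise ValueError("responses must not be empty")
    cutoff = scale_max - boxes + 1  # lowest value that still counts as a top box
    hits = sum(1 for r in responses if r >= cutoff)
    return hits / len(responses)


# Made-up ratings on a 1-to-5 agreement scale, for illustration only.
ratings = [5, 4, 4, 3, 5, 2, 4, 1, 5, 4]

print(top_box_proportion(ratings, scale_max=5))           # 0.3 (top box)
print(top_box_proportion(ratings, scale_max=5, boxes=2))  # 0.7 (top two boxes)
```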

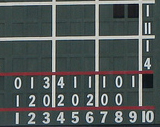

Top-two-box scoring is popular for rating scales with between 7 and 11 points. For example, the 11-point Net Promoter Question, “How likely are you to recommend this product to a friend?”, has the top-two boxes of 9 and 10.

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Not at all Likely | | | | | | | | | | Extremely Likely |
| | | | | | | | | | Top 2 Box | Top 2 Box |

The top-two-box responses are called “promoters,” and responses from 0 to 6 (bottom 7) are called “detractors.” The Net Promoter Score gets its name from subtracting the proportion of detractors from the proportion of promoters.
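Written out as a formula, with labels chosen here purely for readability, that subtraction looks like this:

$$
\text{Net Promoter Score} = \frac{\text{promoters} - \text{detractors}}{\text{total responses}} \times 100\%
$$

Responses of 7 and 8 count toward the total but appear in neither term of the numerator.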

The appeal of top-box scores is that they are intuitive. It doesn’t matter if the ratings are about agreeing, purchasing or recommending. You’re basically cutting to the chase and only considering the highly opinionated folks.

Top-Box Scoring Loses Information

There are two major disadvantages to this scoring method: You lose information about both precision and variability. When you go from 7 response options to 2 or from 11 to 2, a response of a 1 becomes the same thing as a 5. Information is lost.

Losing precision and variability means it’s harder to track improvements, such as changes in attitudes after a new feature or service was launched.

For example, the following 42 responses to the Net Promoter question came from a usability test of the American Airlines website (aa.com).

| Response Category | Raw Response | # of Responses |
|---|---|---|
| Detractors | 0, 0, 0, 2, 3, 3, 3, 4, 5, 5, 5, 5, 5, 5, 5, 5, 5, 6, 6, 6, 6, 6 | 22 |
| Passive | 7, 7, 7, 7, 7, 7, 8, 8, 8, 8, 8, 8 | 12 |
| Promoters (Top 2-Box) | 9, 9, 9, 10, 10, 10, 10, 10 | 8 |

Table 1: 42 actual responses to the question “How likely are you to recommend aa.com to a friend?”

The top-two-box score is 8/42 = 19%. There are 22 detractors (0 to 6), so the Net Promoter Score is a paltry (8 - 22)/42 = -14/42 = -33% (which seems consistent with some recent criticism).

Now, let’s say American Airlines hires the best UX folks to improve the website and the following new scores are obtained after the improvement:

| Response Category | Raw Response | # of Responses |
|---|---|---|
| Detractors | 4, 5, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6 | 22 |
| Passive | 7, 7, 7, 7, 7, 7, 8, 8, 8, 8, 8, 8 | 12 |
| Promoters (Top 2-Box) | 10, 10, 10, 10, 10, 10, 10, 10 | 8 |

Table 2: 42 potential responses to the question “How likely are you to recommend aa.com to a friend?” after a hypothetical change in design.

The only differences here are a reduction in the number of respondents who completely hated the website (the 0s, 2s and 3s are gone, and most detractors now give a 6) and three 9s changing to 10s.

Yet the top-two-box score is still 19% and the Net Promoter Score is still -33%. There were no differences in the box scores because the changes all occurred within categories.
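To check this, here is a small Python sketch that recodes the raw responses from Table 1 and Table 2 into the three Net Promoter categories and recomputes both scores. The helper names `category` and `box_scores` are invented for this example; the cutoffs (9 to 10 promoters, 7 to 8 passive, 0 to 6 detractors) are the ones described above.

```python
from collections import Counter

# Raw responses from Table 1 (actual) and Table 2 (hypothetical redesign).
before = [0]*3 + [2] + [3]*3 + [4] + [5]*9 + [6]*5 + [7]*6 + [8]*6 + [9]*3 + [10]*5
after = [4, 5] + [6]*20 + [7]*6 + [8]*6 + [10]*8


def category(score):
    """Map a 0-to-10 likelihood-to-recommend score to its Net Promoter category."""
    if score >= 9:
        return "promoter"
    if score >= 7:
        return "passive"
    return "detractor"


def box_scores(responses):
    """Return (top-two-box proportion, Net Promoter Score) as fractions."""
    counts = Counter(category(r) for r in responses)
    n = len(responses)
    top_two = counts["promoter"] / n
    nps = (counts["promoter"] - counts["detractor"]) / n
    return top_two, nps


for label, data in (("before", before), ("after", after)):
    top_two, nps = box_scores(data)
    print(f"{label}: top-two-box={top_two:.0%} NPS={nps:.0%}")
# Both lines report top-two-box=19% NPS=-33%.
```

Once each response is collapsed into a category, the two data sets become indistinguishable, which is exactly the information loss described above.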

Use Means & Standard Deviations

In the behavioral sciences, marketing and certainly applied research, it is acceptable to take averages and standard deviations of rating scale data. Only measurement purists will take issue with this practice.

The average rating on the aa.com website before the changes was 6.12 with a standard deviation of 2.71 (those are actual scores from 42 users). After the hypothetical changes, the average increased 16% to 7.12 with less variability, having a standard deviation of 1.64 (those are realistic but fictitious scores).

The improvement in scores is statistically significant (t = 2.05, p = .045) despite there being no difference in top-box and Net Promoter Scores.
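The means, standard deviations and t statistic can be reproduced from the raw responses with Python's standard library. The article doesn't say which t-test was used, so this sketch assumes a two-sample Welch test (unequal variances); with equal sample sizes the t statistic is the same as for a pooled test. The function name `welch_t` is made up for this example.

```python
import math
import statistics

# Raw responses from Table 1 (actual) and Table 2 (hypothetical redesign).
before = [0]*3 + [2] + [3]*3 + [4] + [5]*9 + [6]*5 + [7]*6 + [8]*6 + [9]*3 + [10]*5
after = [4, 5] + [6]*20 + [7]*6 + [8]*6 + [10]*8


def welch_t(a, b):
    """Welch's t statistic (b minus a) and its approximate degrees of freedom."""
    va = statistics.variance(a) / len(a)  # squared standard error of mean(a)
    vb = statistics.variance(b) / len(b)  # squared standard error of mean(b)
    t = (statistics.mean(b) - statistics.mean(a)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


for label, data in (("before", before), ("after", after)):
    print(f"{label}: mean={statistics.mean(data):.2f} sd={statistics.stdev(data):.2f}")
# before: mean=6.12 sd=2.71
# after: mean=7.12 sd=1.64

t, df = welch_t(before, after)
print(f"t={t:.2f}")  # t=2.05
```

Turning t and the degrees of freedom into a p-value needs a t-distribution, which the standard library doesn't provide. If SciPy is available, `scipy.stats.ttest_ind(before, after, equal_var=False)` is one way to get it.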

Top-box and top-two-box scoring systems have the benefit of simplicity but at the cost of losing information. Even rather large changes can be masked when rating scale data with many options is reduced to two or three options. It can mean the difference between showing no improvement and a statistically significant one.

Top-box scoring has its place for quickly assessing results and especially for stand-alone studies when there’s no meaningful comparison or benchmark. If the results ever get compared though, you’ll want a more precise scoring system to have a good chance of detecting any differences in attitudes from design changes.