The user puts the u in UX.

What defines UX in general, and usability in particular, is the observation of people interacting with products: software, hardware, and websites.

For decades, UX professionals have worked to convince executives and product managers of the importance of involving users in the design and evaluation of product experiences.

We stress the necessary difference between usability testing and traditional market research activities (such as surveys and focus groups), which focus more on attitudes and predilections than on the behavior users exhibit as they use an interface.

Understandably, UX researchers are skeptical of data from methods such as surveys that claim to measure the user experience. They ask: how can you talk meaningfully about your users’ experience if you didn’t actually observe any users?

As a usability professional who runs thousands of participants a month in two labs, I understand this skepticism. Too often I’ve seen marketing or engineering teams rely on surveys or focus groups as user “acceptance” testing and treat them as equivalent to a usability test.

So can you evaluate the user experience without observing users?

The answer is a qualified yes. Under certain circumstances you can get useful data if you use suitable proxies for direct observation of user behavior: retrospective accounts, heuristic evaluation, and keystroke-level modeling (KLM).

Retrospective Accounts

A retrospective account involves having a user reflect on a recent experience with an application. The user experience is a mix of user attitude (affinity, comfort, loyalty) and action (task completion and task time). Attitude does not account for the entire user experience, but it does reflect the presence of problems with behavior. That’s why we collect attitudinal data (post-task and post-study questions) as part of a usability test in which we observe users. We use these measures of attitude to supplement the behavioral data collected from observing users as they attempt tasks.

We usually ask these attitudinal questions minutes after a task is completed. We could instead get a retrospective account by surveying users many months later. Retrospective accounts don’t provide immediate feedback about the test-environment experience, but they do tell you about your users’ responses to their real-world experience of your product interface.

For example, we routinely release benchmark usability data, like the airline and aggregator data. We obtain this data by recruiting a representative sample of participants and asking them to reflect on their most recent experience using a standard set of questions. They are the same set of attitudinal questions we ask during a task-based usability study. One of these is the SUPR-Q, a standardized questionnaire for measuring perceptions of usability, appearance, loyalty, and trust.

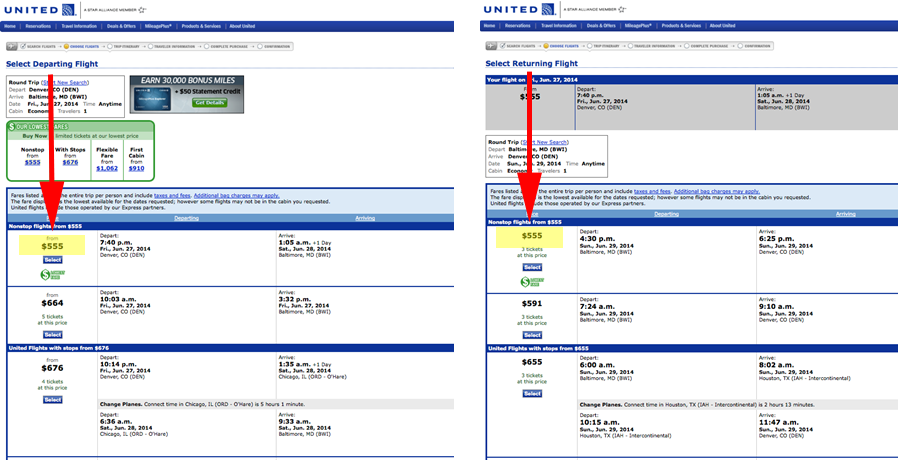

We found, for example, that the United Airlines website had the lowest ratings for website usability. These scores were derived not from observation but from asking people about their real-world experiences of the previous six months.

As part of the study we asked participants whether they encountered problems and to describe them. One participant in the United study said:

“The search results for flights can be confusing; I like seeing departing flights from origin city and destination city at the same time. It’s unclear what the final price will be because it gives individual price points for each flight, yet these prices aren’t actually combined for a final cost.”

The metrics from the retrospective account suggested that the United Airlines website provided a below-average user experience, and the comments from participants gave us some idea why.

In a separate study, we had participants do a traditional task-based usability test on the United, Southwest, and American Airlines websites, and we saw, as shown in Figure 1, evidence of the problems described by the participant in the retrospective study. The metrics from that test corroborated the findings of the retrospective data, which, among other things, indicated that participants favored Southwest’s website 2 to 1 over United’s.

Figure 1: The United Airlines website prices segments separately, which is good for understanding how each segment affects a price, but leads to confusion over what the total price is. This was a problem reported by a user from a retrospective survey and then observed later in a separate usability test.

This illustrates both the strengths and limitations of retrospective accounts: we can use them to derive metrics that describe the experience, but since we can’t use them to identify the specific UI problems, we can’t know exactly what to fix.

Interestingly enough, users can be a good source for identifying usability problems. One study found that on average users were able to report around half the problems trained usability professionals found.

Heuristic Evaluations

A heuristic evaluation lets you assess the user experience without observing user behavior directly. In a heuristic evaluation, an expert reviews the interface against a set of guidelines or principles (heuristics), which provide a template for uncovering problems that a user will likely encounter. This method provides a way to review an interface in addition to usability testing or when usability testing isn’t feasible (because of budget constraints, lack of time, or lack of available participants).

We’ve found that heuristic evaluations identify around a third of the problems that are uncovered during a task-based usability test. The effectiveness increases with more evaluators and with evaluators who have domain knowledge and familiarity with the interface.
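To get a feel for how adding evaluators might raise coverage, here is a rough sketch. It rests on assumptions that are not from our data: it treats the one-third figure as the detection rate of a single evaluator, and it assumes each evaluator finds each problem independently with that same probability. Under those assumptions, the expected share of problems found by n evaluators is 1 - (1 - p)^n. The function name is hypothetical.

```python
def expected_coverage(p_single: float, evaluators: int) -> float:
    """Expected share of problems found by at least one evaluator.

    Assumes every evaluator independently detects each problem with
    probability p_single (a simplification; real evaluators overlap
    and differ in skill).
    """
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be between 0 and 1")
    if evaluators < 0:
        raise ValueError("evaluators must be non-negative")
    return 1.0 - (1.0 - p_single) ** evaluators


if __name__ == "__main__":
    # Assumed single-evaluator detection rate of one third.
    for n in (1, 3, 5):
        print(n, round(expected_coverage(1 / 3, n), 2))
```

With these assumed inputs the model suggests roughly 70% coverage for three evaluators and about 87% for five. Treat these as illustrations of diminishing returns, not as measured results.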

Keystroke Level Modeling

You can also use keystroke-level modeling (KLM) to evaluate the user experience without direct user observation.

Almost thirty years ago, researchers at Xerox PARC and Carnegie Mellon developed this method to estimate task times for experienced users. KLM is part of the cognitive-modeling technique called GOMS (goals, operators, methods, and selection rules), as detailed in the seminal usability book The Psychology of Human-Computer Interaction by Card, Moran, and Newell.

Card, Moran, and Newell brought in hundreds of users and had them complete tasks repeatedly. They decomposed large tasks, like typing a letter or using a spreadsheet, into millisecond-level actions (called operators). They found that just a few of these operators are enough to describe almost any task a user does on a computer.

In the ensuing decades several researchers replicated these findings and tweaked some of the times by a few milliseconds. Using KLM, you can predict a skilled user’s task time (error-free) to within ten to twenty percent.
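As a minimal sketch of how a KLM estimate is put together, the snippet below sums operator times for a task written as a string of operator codes. The operator values are the commonly cited Card, Moran, and Newell figures, included here as assumptions rather than numbers from this article, and the example task (click a search field, then type a three-letter airport code and press Enter) is hypothetical.

```python
# Commonly cited KLM operator times in seconds (assumed values).
OPERATOR_SECONDS = {
    "K": 0.20,  # press a key (average skilled typist)
    "P": 1.10,  # point with the mouse to a target on screen
    "B": 0.10,  # press or release the mouse button
    "H": 0.40,  # move hands between keyboard and mouse
    "M": 1.35,  # mental preparation before an action
}


def klm_estimate(sequence: str) -> float:
    """Return the predicted error-free task time for a skilled user."""
    return round(sum(OPERATOR_SECONDS[op] for op in sequence), 2)


def error_band(seconds: float, tolerance: float = 0.20) -> tuple:
    """Return (low, high) bounds for a given prediction tolerance."""
    return (round(seconds * (1 - tolerance), 2),
            round(seconds * (1 + tolerance), 2))


if __name__ == "__main__":
    # Hypothetical task: think, point at the field, click (press and
    # release), move hands to the keyboard, type 3 letters, press Enter.
    task = "MPBBHKKKK"
    estimate = klm_estimate(task)
    print(estimate, error_band(estimate))
```

For this hypothetical sequence the estimate is 3.85 seconds, and a twenty percent tolerance gives a band of about 3.08 to 4.62 seconds. Deciding where to place the mental operator (M) is a judgment call, so two analysts may model the same task slightly differently.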

KLM is ideal when user efficiency (time) is paramount. This involves situations where users perform the same tasks repeatedly and where, consequently, a savings of a few seconds per operator can add up to significant savings (and reduced frustration) over time.
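To see how small savings accumulate, here is a back-of-the-envelope sketch. Every input is a hypothetical value chosen for illustration, including the default of 250 working days a year, and the function name is made up.

```python
def annual_hours_saved(seconds_saved_per_task: float,
                       tasks_per_day: int,
                       users: int,
                       working_days: int = 250) -> float:
    """Total hours saved per year across all users (illustrative only)."""
    total_seconds = (seconds_saved_per_task * tasks_per_day
                     * users * working_days)
    return total_seconds / 3600


if __name__ == "__main__":
    # Hypothetical: a redesign saves 3 seconds on a task that each of
    # 50 users performs 200 times a day.
    print(round(annual_hours_saved(3, 200, 50), 2))
```

With these made-up inputs, a three-second saving works out to a little over 2,000 hours a year. This is the kind of case where a KLM comparison of two designs can pay off.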

Conclusion

Nothing can replace directly observing users as they perform tasks. But certain proxy techniques (retrospective accounts, heuristic evaluations, and KLM) can produce useful data based on patterns or recollections of user behavior. Each tool has its strengths and weaknesses, and each provides limited but useful insights into the user experience, so each has a place in the UX researcher’s toolbox.