How are you reading this page? Are you at work? At home?

Are you checking your phone or email as you read?

Are you eating? Are pets or family members nearby?

We rarely interact with websites or software in isolation and without distractions. Yet for decades, when we spoke of usability testing, we pictured a quiet room with a two-way mirror hiding the observers, who communicated through a voice-of-god microphone.

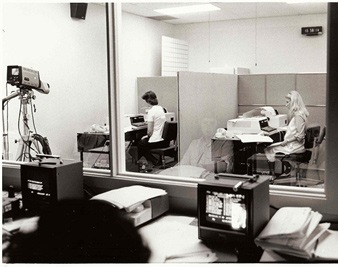

The lab was expensive, the equipment was expensive, and the method was time-consuming. It’s no surprise that usability testing lay in the domain of deep-pocketed corporations like IBM or Bell Labs. It was like a scene out of Stanley Milgram’s lab. “Act naturally, and pay no attention to the men in blue suits behind the glass curtain.”

Figure 1: An IBM usability lab in the late 1970s.

Usability Testing Goes Remote

With the recent adoption of unmoderated remote testing methods, usability testing can be done for a fraction of the cost and time. A traditional usability test requires a facilitator and a participant to attend a session at the same time (and usually in the same location). Unmoderated sessions let participants complete a study at any time, without a facilitator. Internet panel companies, like Op4G, Toluna, and SSI, can deliver thousands of qualified candidates to participate in a remote usability test. You can recruit almost any type of user, from small-business owners to hardware engineers to physicians.

Can we trust the data produced by this type of test? In one examination of the difference between methods, we compared the data collected from lab studies to the data from an unmoderated study. Many metrics were surprisingly similar (like completion rates and SUS scores), while others showed differences that need to be explored further (especially task time).
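To make that kind of comparison concrete, here is a minimal Python sketch of how the three metrics named above might be summarized for each method. It is not the analysis from the study described here. The session layout and the names `sus_score`, `completion_rate`, and `summarize` are assumptions made for illustration. The only part taken from a standard is the System Usability Scale scoring rule: ten items rated 1 to 5, odd-numbered items contribute the rating minus 1, even-numbered items contribute 5 minus the rating, and the sum is multiplied by 2.5.

```python
from statistics import mean, median


def sus_score(responses):
    """Score one 10-item SUS questionnaire (ratings 1-5) on the 0-100 scale."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS needs ten ratings, each from 1 to 5")
    total = 0
    for index, rating in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9 are positively worded.
            total += rating - 1
        else:
            # Items 2, 4, 6, 8, 10 are negatively worded.
            total += 5 - rating
    return total * 2.5


def completion_rate(outcomes):
    """Share of task attempts that succeeded (outcomes are booleans)."""
    return sum(outcomes) / len(outcomes)


def summarize(sessions):
    """Summarize one method's sessions.

    Each session is a hypothetical dict with the keys
    'completed' (bool), 'sus' (ten ratings), and 'task_seconds' (number).
    """
    return {
        "completion_rate": completion_rate([s["completed"] for s in sessions]),
        "mean_sus": mean(sus_score(s["sus"]) for s in sessions),
        # The median is less sensitive than the mean to a few very slow sessions.
        "median_task_seconds": median(s["task_seconds"] for s in sessions),
    }
```

Calling `summarize` once on the lab sessions and once on the unmoderated sessions gives two small dictionaries that can be compared side by side. Whether a gap between them is meaningful still depends on sample size and study design, which this sketch does not address.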

One shortcoming of unmoderated services is that they don’t show us what users are doing: their facial expressions, their postures, their environments. We can look at click paths, metrics, heat maps, and verbatim comments, but these don’t make it easy to diagnose usability problems. Therefore, we use unmoderated testing largely for benchmarking.

The New Face of Testing

Recent innovations in technology can give us a better idea about who these test subjects are and what they’re doing. We can see and hear the users and record their screens as they perform tasks. We recently used a new audio and video feature from UserZoom that shows the study participants’ faces via their webcams. Figure 2 shows snapshots of what we saw.

In addition to giving us diversity in ages, gender, and geography by recruiting participants from around the US, this technology makes clear that participants aren’t necessarily sitting undistracted, attending to the research. We see pets, food, music, television, family. Clothing seems to be optional.

Figure 2: The faces of participants as they attempt tasks in an unmoderated remote usability test.

UserZoom isn’t the only technology provider to bring us the face of the user. Usertesting.com, TryMyUI, and now YouEye have testers trained to think aloud as they attempt the tasks. However, it’s the untrained users who likely provide a more natural representation of the faces behind the data.

Balancing Internal and External Validity

The antiseptic environment of the usability lab gives us control over variables that would otherwise make it difficult to understand what in the application needs to be fixed. This control improves what’s called the internal validity of our study.

For example, when participants in a lab-based study attempt tasks on competitive interfaces, we largely control nuisance variables like Internet speed, browsers, operating systems, and monitor sizes that can confound the results. This allows us to be confident in the causes of good and bad experiences. But the price of high internal validity is often low external validity. We may understand the causes in our controlled lab, but it’s more difficult to generalize what we see, because users in this environment don’t necessarily reflect how people actually interact with a website or software.

So which is the more accurate picture of the user experience: the controlled lab study or the loose, unmoderated study? The truth lies somewhere in between.

Like experiments in general, good user research needs to balance the internal and external validity of the study by using both methods. The advances in technology enable us to collect data more efficiently from users in their natural environments. But the reduced time and cost of data collection do not excuse a sloppy study design. The more accurate picture of users is painted by research that balances the tenets of good experimental design with the new means of data collection. While research into the reliability of panel research continues to evolve, we can now put a face to all that data!