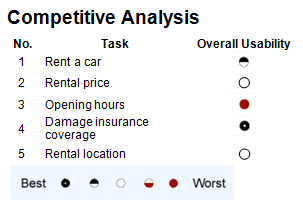

Which product is the most usable?

One of the primary goals of a comparative study is to understand which product or website performs the best or worst on usability metrics such as completion rates or perceptions of usability.

Comparisons can be made between competitive products or alternate design concepts.

When conducting a comparative usability study, a number of variables make the setup more complicated than a standalone usability study.

This makes comparative studies more like classic experiments than corporate research projects.

While you have probably never conducted a clinical trial involving both test drugs and a placebo, you’ve almost certainly benefited from one. There are a number of factors that need to be accounted for in clinical trials so that the apparent efficacy of a drug within a treatment group isn’t due to that group being disproportionately healthier than, or physiologically different from, the control group that receives the placebo. These unintended factors in each group are referred to as “nuisance variables.”

The same principle applies to conducting a comparative usability study. Taking individual differences into account is important to avoid erroneous conclusions about the superiority of one product or website over another. In human-computer interaction, the variability between user abilities often outweighs the differences between the designs. We therefore want to be sure that higher scores for one product are not due to characteristics of the users participating in the study.

While nuisance variables vary by product and domain, here are five common variables we encounter that should be collected and managed in the analysis.

- Prior Experience: One of the most important variables that will influence how users perform in a usability study is their prior experience with the application (website or software). Users with the most experience tend to have higher completion rates and complete tasks more quickly than users with less experience. Prior experience also affects users’ attitudes, so attitudinal data are also more favorable from more experienced users.

- Brand & Product Attitude: Users can have a lot of experience with a website or product but nonetheless hold a very strong negative opinion of the company (brand) or the specific product. When recruiting participants, we often recommend screening out the “haters”: participants who so despise a company or product that their data are hardly reliable. Regardless of how you handle haters, you should collect some measure of favorability or unfavorability toward the brand and product. For example, if you are testing the usability of retail websites, you’ll want to know how people feel toward, say, Target, Walmart and Best Buy and then manage the differences in the analysis (see below).

- Domain Skills: For products that require specific domain knowledge or specialized skills, the participants’ skill level affects their performance. Their architectural drafting skills, bookkeeping skills or technical proficiency usually affect task performance more than differences in interface or design elements do. Where domain skills matter, record each participant’s skill level in some way.

- Confounding Experiences: If your company builds a product that’s embedded in another product or website (such as a shopping cart or payment form), you need to account for the effects the parent product has on this contingent child experience. For example, if you want to know whether users find your checkout form usable and want to test it on a live website, the parent website where users browse for items will have a carry-over effect on the form. If performance is poor, the cause could be a bad browsing experience rather than a bad checkout experience. Testing multiple parent experiences and understanding how much each one helps or hinders the child experience mitigates this effect.

- Product Order: If participants will be interacting with multiple products or websites in the same session (called a “within-subjects study”), then it’s important to alternate the order in which they test the products. This technique is called counterbalancing, and it ensures that the same percentage of participants sees Product A first as sees Product B first. Order can affect the results in several ways: participants may improve as they get “warmed up” (a practice effect), or they may become fatigued after being in a session for an hour. By counterbalancing the order, you ensure that these undesirable effects are spread evenly across all the products being tested and don’t affect one product disproportionately.
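The counterbalancing described under Product Order can be sketched in a few lines of Python. This is a minimal sketch, assuming products are identified by simple labels and participants by hypothetical IDs; `latin_square_orders`, `assign_orders` and `position_counts` are illustrative names, not part of any particular tool. It rotates the product list to build a cyclic Latin square, so each product appears in each serial position equally often once the number of participants is a multiple of the number of products. With two products this reduces to alternating A-B and B-A.

```python
from collections import Counter
from itertools import cycle


def latin_square_orders(products):
    """Cyclic Latin square: row i is the product list rotated left by i positions,
    so every product occupies every serial position exactly once across the rows."""
    n = len(products)
    return [products[i:] + products[:i] for i in range(n)]


def assign_orders(participant_ids, products):
    """Give each participant a presentation order, cycling through the square's rows."""
    rows = latin_square_orders(list(products))
    return {pid: list(order) for pid, order in zip(participant_ids, cycle(rows))}


def position_counts(assignments):
    """Count how often each product was seen in each serial position (0 = first)."""
    counts = Counter()
    for order in assignments.values():
        for position, product in enumerate(order):
            counts[(product, position)] += 1
    return counts


# Hypothetical example: six participants, three products.
participants = [f"P{i}" for i in range(1, 7)]
plan = assign_orders(participants, ["A", "B", "C"])
for pid, order in plan.items():
    print(pid, " -> ".join(order))
# P1 A -> B -> C, P2 B -> C -> A, P3 C -> A -> B, then the cycle repeats for P4-P6
```

A cyclic square balances serial position but not which product immediately follows which. If carry-over between specific pairs of products is a concern, a balanced Latin square (Williams design), which also balances immediate predecessors, is the usual refinement.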

Addressing Nuisance Variables

To both understand and mitigate the effects of these undesirable variables on the outcome measures, there are generally three things you can do.

- Balance Variables: This is usually the stakeholders’ first instinct, as it’s the most intuitive. It involves identifying the variables that affect performance (e.g., prior experience and brand attitude) and ensuring that each product or experience is tested with the same percentage of participants at each level of those variables. This, however, can be a challenge, as recruiting participants proportionally takes more time and costs more. It’s also often not possible to find enough people who have, for example, never purchased on Amazon.com but use an iPhone regularly.

- Separate & Weight: With balanced or unbalanced subgroups, you can run statistical comparisons within each subgroup defined by nuisance variables such as experience and attitude. For example, divide the participants into low and high experience, or favorable and unfavorable attitude, and see how the metrics differ, if at all. With separate scores for the subgroups, you can then create a weighted composite score. For example, if you know 60% of users have more than 5 years of experience with your product but your sample had only 44% of such users, you can weight the experienced subgroup’s scores at 60% of the composite (and the rest at 40%) rather than at their 44% share of the sample.

- Statistically Control: There are a few statistical techniques that allow us to partial out the unwanted effects. One of the most common is an ANCOVA (Analysis of Covariance), a special form of the more familiar ANOVA (Analysis of Variance). An ANOVA tells you whether the products’ scores differ statistically (follow-up comparisons then show which products differ from which). An ANCOVA does the same thing but controls for the unwanted variables, called covariates. It estimates how much of the difference between groups remains after factoring out the effect of the covariates (such as prior experience or brand attitude). ANOVAs and ANCOVAs both involve assumptions and require some expertise and software, so you’ll want professional assistance if running these.
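The Separate & Weight and Statistically Control approaches can also be sketched in Python. This is a minimal sketch, not a substitute for a full statistical analysis: the function names and every score below are hypothetical, chosen only to make the arithmetic easy to follow. `weighted_composite` reweights subgroup means to known population shares (the 60%/40% split versus the 44% sample share from the Separate & Weight example). `ancova_adjusted_difference` computes the covariate-adjusted difference between two products’ means using the pooled within-group regression slope, which is the point estimate a one-way ANCOVA with a single covariate produces. It does not compute the F test or p-value, and it assumes the covariate’s slope is the same for both products (the homogeneity-of-slopes assumption).

```python
from statistics import mean


def weighted_composite(subgroup_means, population_shares):
    """Combine subgroup means using population shares instead of sample shares."""
    if abs(sum(population_shares.values()) - 1.0) > 1e-9:
        raise ValueError("population shares must sum to 1")
    return sum(subgroup_means[g] * share for g, share in population_shares.items())


def ancova_adjusted_difference(x_a, y_a, x_b, y_b):
    """Difference in outcome means (A minus B) after adjusting for one covariate.

    Uses the pooled within-group slope b_w, so the adjusted difference is
    (mean_y_a - mean_y_b) - b_w * (mean_x_a - mean_x_b).
    """
    sxy = 0.0  # pooled within-group cross-products of covariate and outcome
    sxx = 0.0  # pooled within-group sum of squares of the covariate
    for xs, ys in ((x_a, y_a), (x_b, y_b)):
        mx, my = mean(xs), mean(ys)
        sxy += sum((x - mx) * (y - my) for x, y in zip(xs, ys))
        sxx += sum((x - mx) ** 2 for x in xs)
    b_w = sxy / sxx
    raw = mean(y_a) - mean(y_b)
    return raw - b_w * (mean(x_a) - mean(x_b))


# Hypothetical subgroup completion rates: 0.90 for high-experience users, 0.70 for the rest.
rates = {"high": 0.90, "low": 0.70}
sample_view = weighted_composite(rates, {"high": 0.44, "low": 0.56})
population_view = weighted_composite(rates, {"high": 0.60, "low": 0.40})
print(round(sample_view, 3), round(population_view, 3))  # 0.788 0.82

# Hypothetical data: covariate = years of prior experience, outcome = a satisfaction score.
exp_a, score_a = [1, 2, 3], [3, 4, 5]
exp_b, score_b = [3, 4, 5], [4, 5, 6]
print(mean(score_a) - mean(score_b))  # -1 (raw: Product B looks better)
print(ancova_adjusted_difference(exp_a, score_a, exp_b, score_b))  # 1.0 (adjusted: A is higher)
```

In the hypothetical data, Product B’s raw mean is higher, but its participants also had more experience; once experience is held constant, the adjusted difference favors Product A. For an actual analysis, a statistics package reports the covariate-adjusted test and lets you check its assumptions, for example an OLS model of the form `score ~ C(product) + experience` in statsmodels’ formula interface followed by `anova_lm`.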

A comparative usability evaluation requires more planning and thought to account for unwanted variables than a stand-alone study. Carefully setting up the study and analyzing the results is important if you plan on publishing your results or if the findings have significant consequences. When in doubt, measure as many of the variables that could affect the validity of the findings as possible, because there is a lot that can be done to minimize unwanted effects. Call us when you get stuck!